Wednesday, March 08, 2006

Immortal Styrofoam Meets its Enemy

There's an old joke that if you were reincarnated, you might want to come back as a Styrofoam cup.

Why? Because they last forever. Ba-dum-bum.

Despite being made of 95 percent air, Styrofoam's manufactured immortality has posed a problem for recycling efforts. More than 3 million tons of the durable material are produced every year in the United States, according to the U.S. Environmental Protection Agency. Very little of it is recycled.

Help may come from bacteria that have been found to eat Styrofoam and turn it into usable plastic. This is the stuff recycling dreams are made of: Yesterday's cup could become tomorrow's plastic spoon.

Kevin O’Connor of University College Dublin and his colleagues heated polystyrene foam, the generic name for Styrofoam, to convert it to styrene oil. Styrene occurs naturally in peanuts, strawberries and a good steak. A synthetic form is used in car parts and electronic components.

Anyway, the scientists fed this styrene oil to the soil bacterium Pseudomonas putida, which converted it into a biodegradable plastic known as PHA (polyhydroxyalkanoates).

PHA can be used to make plastic forks and packaging film. It is resistant to heat, grease and oil. It also lasts a long time. But unlike Styrofoam, PHA biodegrades in soil and water.

The process will be detailed in the April 1 issue of the American Chemical Society journal Environmental Science & Technology.

Coffee mixes badly with certain genes

People carrying a common variation in a certain gene could be worsening their risk of a heart attack simply by drinking several cups of coffee per day.

A possible link between coffee and heart disease has been tested before, but the results have been controversial. One reason is that coffee addicts are more likely to smoke and indulge in other unhealthy behaviours. Another is that coffee contains a mix of caffeine and other chemicals, so it is unclear which of them affects the heart.

In the new study, researchers specifically tested whether caffeine in coffee could promote heart problems by examining a gene called CYP1A2. The gene, which codes for an enzyme that helps to break down caffeine, comes in two flavours: one version, known as CYP1A2*1F, metabolizes caffeine more slowly than the other.

People who carry one or two copies of the 'slow' gene are slow metabolizers and take longer to rid the body of caffeine than those who carry two copies of the 'fast' gene.

Ahmed El-Sohemy of the University of Toronto, Canada, and his team examined more than 2,000 people in Costa Rica who had suffered, but survived, a heart attack, and an equivalent group of healthy individuals. A questionnaire revealed that quaffing coffee boosted the risk of a heart attack in those who had genes making them slow metabolizers.

Slow metabolizers who drank two to three 250-millilitre cups of coffee each day were 36% more likely to have suffered a heart attack than single-cup drinkers. And those who drank four or more cups were 64% more likely to have been struck. The risk was greatest in those below the age of 60.

By contrast, one to three cups seemed to protect those individuals whose genes made them fast metabolizers. "The results are clear and quite striking," says El-Sohemy. Researchers do not know exactly how caffeine could be affecting the heart, but one idea is that it could affect the ability of blood vessels to expand and contract.

Drop the pick-me-up?

The study suggests that a simple genetic test could identify those who are slow caffeine metabolizers, and that these people could be advised to cut down on their morning pick-me-up.

But both El-Sohemy and other experts say they are not ready to make that recommendation yet. "Other conditions needs to be fulfilled before we can sound the alarm of 'Abandon Starbucks! Young CYP1A2*1F carriers first!'" says Jose Ordovas, an expert in nutrition and genetics at Tufts University, Boston, Massachusetts.

People who consume a lot of coffee could also be more stressed and sleep less, and this could explain their heart problems, Ordovas says.

Coffee culture

To confirm the recent study, experts say that it needs to be repeated in other ethnically and genetically different populations, in which other genes and lifestyle factors could influence how caffeine affects the heart.

Researchers would then need to carry out clinical trials in which they randomly assign a group of individuals to take caffeine or a placebo, and examine whether the caffeine can cut cholesterol or other indicators of heart health.

El-Sohemy notes that people cannot assume that they are slow metabolizers just because they become jittery after one cup of coffee.

The eye-opening effect of caffeine is determined primarily by the way it acts in the nervous system, rather than by how long it lingers in the body. So it is hard to distinguish slow metabolizers from fast ones just from the way they react to coffee.

source:http://www.nature.com/news/2006/060306/full/060306-9.html

Still Evolving, Human Genes Tell New Story

The genes that show this evolutionary change include some responsible for the senses of taste and smell, digestion, bone structure, skin color and brain function.

Many of these instances of selection may reflect the pressures that came to bear as people abandoned their hunting and gathering way of life for settlement and agriculture, a transition well under way in Europe and East Asia some 5,000 years ago.

Under natural selection, beneficial genes become more common in a population as their owners have more progeny.

Three populations were studied: Africans, East Asians and Europeans. In each, a mostly different set of genes had been favored by natural selection. The selected genes, which affect skin color, hair texture and bone structure, may underlie the present-day differences in racial appearance.

The study of selected genes may help reconstruct many crucial events in the human past. It may also help physical anthropologists explain why people over the world have such a variety of distinctive appearances, even though their genes are on the whole similar, said Dr. Spencer Wells, director of the Genographic Project of the National Geographic Society.

The finding adds substantially to the evidence that human evolution did not grind to a halt in the distant past, as is tacitly assumed by many social scientists. Even evolutionary psychologists, who interpret human behavior in terms of what the brain evolved to do, hold that the work of natural selection in shaping the human mind was completed in the pre-agricultural past, more than 10,000 years ago.

"There is ample evidence that selection has been a major driving point in our evolution during the last 10,000 years, and there is no reason to suppose that it has stopped," said Jonathan Pritchard, a population geneticist at the University of Chicago who headed the study.

Dr. Pritchard and his colleagues, Benjamin Voight, Sridhar Kudaravalli and Xiaoquan Wen, report their findings in today's issue of PLOS-Biology.

Their data is based on DNA changes in three populations gathered by the HapMap project, which built on the decoding of the human genome in 2003. The data, though collected to help identify variant genes that contribute to disease, also give evidence of evolutionary change.

The fingerprints of natural selection in DNA are hard to recognize. Just a handful of recently selected genes have previously been identified, like those that confer resistance to malaria or the ability to digest lactose in adulthood, an adaptation common in Northern Europeans whose ancestors thrived on cattle milk.

But the authors of the HapMap study released last October found many other regions where selection seemed to have occurred, as did an analysis published in December by Robert K. Moyzis of the University of California, Irvine.

Dr. Pritchard's scan of the human genome differs from the previous two because he has developed a statistical test that identifies only those genes that have started to spread through populations in recent millennia and have not yet become universal, as many advantageous genes eventually do.

The selected genes he has detected fall into a handful of functional categories, as might be expected if people were adapting to specific changes in their environment. Some are genes involved in digesting particular foods like the lactose-digesting gene common in Europeans. Some are genes that mediate taste and smell as well as detoxify plant poisons, perhaps signaling a shift in diet from wild foods to domesticated plants and animals.

Dr. Pritchard estimates that the average point at which the selected genes started to become more common under the pressure of natural selection is 10,800 years ago in the African population and 6,600 years ago in the Asian and European populations.

Dr. Richard G. Klein, a paleoanthropologist at Stanford, said that it was hard to correlate the specific gene changes in the three populations with events in the archaeological record, but that the timing and nature of the changes in the East Asians and Europeans seemed compatible with the shift to agriculture. Rice farming became widespread in China 6,000 to 7,000 years ago, and agriculture reached Europe from the Near East around the same time.

Skeletons similar in form to modern Chinese are hard to find before that period, Dr. Klein said, and there are few European skeletons older than 10,000 years that look like modern Europeans.

That suggests that a change in bone structure occurred in the two populations, perhaps in connection with the shift to agriculture. Dr. Pritchard's team found that several genes associated with embryonic development of the bones had been under selection in East Asians and Europeans, and these could be another sign of the forager-to-farmer transition, Dr. Klein said.

Dr. Wells, of the National Geographic Society, said Dr. Pritchard's results were fascinating and would help anthropologists explain the immense diversity of human populations even though their genes are generally similar. The relative handful of selected genes that Dr. Pritchard's study has pinpointed may hold the answer, he said, adding, "Each gene has a story of some pressure we adapted to."

Dr. Wells is gathering DNA from across the globe to map in finer detail the genetic variation brought to light by the HapMap project.

Dr. Pritchard's list of selected genes also includes five that affect skin color. The selected versions of the genes occur solely in Europeans and are presumably responsible for pale skin. Anthropologists have generally assumed that the first modern humans to arrive in Europe some 45,000 years ago had the dark skin of their African origins, but soon acquired the paler skin needed to admit sunlight for vitamin D synthesis.

The finding of five skin genes selected 6,600 years ago could imply that Europeans acquired their pale skin much more recently. Or, the selected genes may have been a reinforcement of a process established earlier, Dr. Pritchard said.

The five genes show no sign of selective pressure in East Asians.

Because Chinese and Japanese are also pale, Dr. Pritchard said, evolution must have accomplished the same goal in those populations by working through different genes or by changing the same genes — but many thousands of years before, so that the signal of selection is no longer visible to the new test.

Dr. Pritchard also detected selection at work in brain genes, including a group known as microcephaly genes because, when disrupted, they cause people to be born with unusually small brains.

Dr. Bruce Lahn, also of the University of Chicago, theorizes that successive changes in the microcephaly genes may have enabled the brain to enlarge in primate evolution, a process that may have continued in the recent human past.

Last September, Dr. Lahn reported that one microcephaly gene had recently changed in Europeans and another in Europeans and Asians. He predicted that other brain genes would be found to have changed in other populations.

Dr. Pritchard's test did not detect a signal of selection in Dr. Lahn's two genes, but that may just reflect limitations of the test, he and Dr. Lahn said. Dr. Pritchard found one microcephaly gene that had been selected for in Africans and another in Europeans and East Asians. Another brain gene, SNTG1, was under heavy selection in all three populations.

"It seems like a really interesting gene, given our results, but there doesn't seem to be that much known about exactly what it's doing to the brain," Dr. Pritchard said.

Dr. Wells said that it was not surprising the brain had continued to evolve along with other types of genes, but that nothing could be inferred about the nature of the selective pressure until the function of the selected genes was understood.

The four populations analyzed in the HapMap project are the Yoruba of Nigeria, Han Chinese from Beijing, Japanese from Tokyo and a French collection of Utah families of European descent. The populations are assumed to be typical of sub-Saharan Africa, East Asia and Europe, but the representation, though presumably good enough for medical studies, may not be exact.

Dr. Pritchard's test for selection rests on the fact that an advantageous mutation is inherited along with its gene and a large block of DNA in which the gene sits. If the improved gene spreads quickly, the DNA region that includes it will become less diverse across a population because so many people now carry the same sequence of DNA units at that location.

Dr. Pritchard's test measures the difference in DNA diversity between those who carry a new gene and those who do not, and a significantly lesser diversity is taken as a sign of selection. The difference disappears when the improved gene has swept through the entire population, as eventually happens, so the test picks up only new gene variants on their way to becoming universal.
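The comparison described above can be made concrete with a toy Ruby sketch. For each group it computes the average number of differing positions between pairs of flanking sequences, once for chromosomes that carry the new variant and once for those that don't. The sequences are made up. This simple pairwise-difference measure only illustrates the idea; it is not the statistic Dr. Pritchard's team used.

```ruby
# Average number of mismatched positions over all pairs of equal-length
# sequences. Lower values mean a less diverse group.
def mean_pairwise_differences(sequences)
  pairs = sequences.combination(2).to_a
  return 0.0 if pairs.empty?

  total = pairs.sum { |a, b| a.chars.zip(b.chars).count { |x, y| x != y } }
  total.to_f / pairs.size
end

# Made-up flanking DNA around one site, split by whether each chromosome
# carries the new variant at that site.
carriers     = %w[TTGCA TTGCA TTGCA TTGCT]
non_carriers = %w[CTACA TTGCT CAACA TTACT]

carrier_diversity     = mean_pairwise_differences(carriers)      # 0.5
non_carrier_diversity = mean_pairwise_differences(non_carriers)  # about 2.33

# A recent sweep carries one block of surrounding DNA along with the variant,
# so carriers look unusually alike compared with non-carriers.
ratio = carrier_diversity / non_carrier_diversity
puts format("carriers %.2f, non-carriers %.2f, ratio %.2f",
            carrier_diversity, non_carrier_diversity, ratio)
```

A ratio well below 1 is the kind of signal the test looks for. Once the variant is universal there are no non-carriers left to compare against, which is why the approach only catches sweeps still in progress.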

The selected genes turned out to be quite different from one racial group to another. Dr. Pritchard's test identified 206 regions of the genome that are under selection in the Yorubans, 185 regions in East Asians and 188 in Europeans. The few overlaps between races concern genes that could have been spread by migration or else be instances of independent evolution, Dr. Pritchard said.

source:http://www.nytimes.com/2006/03/07/science/07evolve.html?pagewanted=2&ei=5090&en=039ecd5836bc6b0e&ex=1299387600&partner=rssuserland&emc=rss

Crossing borders: Exploring Active Record

07 Mar 2006

The Java™ programming language has had an unprecedented run of success for vendors, customers, and the industry at large. But no programming language is a perfect fit for every job. This article launches a new series by Bruce Tate that looks at ways other languages solve major problems and what those solutions mean to Java developers. He first explores Active Record, the persistence engine behind Ruby on Rails. Active Record bucks many Java conventions, from the typical configuration mechanisms to fundamental architectural choices. The result is a framework that embraces radical compromises and fosters radical productivity.

2005 was in many ways a strange year for me. For the first time in nearly a decade, I began to do serious development in programming languages other than the Java language. While working with a startup, a business partner and I had some incredible success with a quick proof of concept. We were at a crossroads -- should we continue Java development or switch to something radical and new? We had many reasons to stay on the Java path:

- We'd need to learn a new language from scratch.

- The Java programming community was so strong that we'd have trouble getting our customer to accept a switch.

- We would not have the thousands of Java-centric open source projects to choose from.

But we didn't dismiss the idea of developing in another language and began to build our application in Ruby on Rails, a Web application framework built on the Ruby language. We were successful beyond our wildest imaginings. Since then, I've been able to split time between Java teaching and development (mostly with Hibernate and Spring), and Ruby teaching and development. I've come to believe that it's critical to learn other approaches and languages from time to time, for these reasons:

- Java is not the perfect language for every problem.

- You can take some new ideas back to your Java programming.

- Other frameworks are shaping the way that Java frameworks are built.

In this article, the first in a series that aims to demonstrate these ideas, you'll look at Active Record, the persistence architecture at the heart of Ruby on Rails. I'll also throw in a discussion of schema migrations.

Active Record: Radically different

When I took Rails out for a test drive, I freely admit my attitude was more than a little arrogant. I didn't think Active Record was up to the job, but I've since learned that it offers everything I need for some problems.

The best way to show you how Active Record handles persistence is to write some code. My examples use the MySQL database, but with minor changes, you can use the code with other databases as well.

By far, the easiest way to use Active Record is through Ruby on Rails. If you want to follow along in code, you need to install Ruby and Rails (see Resources).

Then, you can create a project. Go to the directory where you want Rails to create a new project, and type:

Rails creates your project, and you can use Active Record through some nifty Rails tools. The only step that remains is configuring your database. Create a database called people_development and edit the config/database.yml file to look like this (make sure you type in your username and password):

You needn't worry about the test and production environments in this article, so that's all of the configuration you need. You're ready to go.

In Hibernate, you'd usually begin development by working on your Java objects because Hibernate is a mapping framework. The object model becomes the center of your Hibernate universe. Active Record is a wrapping framework, so you start by creating a database table. The relational schema is the center of your Active Record universe. To create a database table, you can use a GUI or type this script:

Creating an Active Record class

Next, create a file called app/models/person.rb. Make it look like this:

This Ruby code creates a class called Person with a superclass called ActiveRecord::Base. (For now, assume Base is like a Java class and that ActiveRecord is like a Java package.) Surprisingly, that's all you need to do right now to get a good amount of capability.

Now you can manipulate Person from within the Active Record console. This console lets you use your database-backed objects from within the Ruby interpreter. Type:

Now, create a new person. Type these Ruby commands:

If you haven't worked with Active Record before, chances are you're seeing something new and interesting. This small example encapsulates two important features: convention over configuration and metaprogramming.

Convention over configuration saves you from tedious repetition by inferring configuration based on names you choose. You didn't need to configure any mapping because you built a database table that followed Rails naming conventions. Here are the main ones:

- Model class names such as EmailAccount are in CamelCase and are English singulars.

- Database table names such as email_accounts use underscores between words and are English plurals.

- Primary keys uniquely identify rows in relational databases. Active Record uses id for primary keys.

- Foreign keys join database tables. Active Record uses foreign keys such as person_id, with an English singular and an _id suffix.

Convention over configuration gains you some additional speed if you follow Rails conventions, but you can override the conventions. For example, you could have a person that looks like this:

So convention over configuration doesn't unnecessarily restrict you; it just rewards you for consistent naming.
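Here is a rough pure-Ruby sketch of how naming conventions can stand in for configuration. It derives a table name, primary key and foreign key from a class name, and it lets a subclass override the defaults. This is not Active Record's code. The pluralizer is deliberately naive (one irregular word plus a few English endings), and the override methods `use_table` and `use_primary_key` are hypothetical names. Rails has its own override mechanism.

```ruby
class ToyRecord
  IRREGULAR = { "person" => "people" }.freeze

  def self.underscore(camel)
    camel.gsub(/([a-z\d])([A-Z])/, '\1_\2').downcase
  end

  # Naive English pluralizer: enough for this example, nothing more.
  def self.pluralize(word)
    return IRREGULAR[word] if IRREGULAR.key?(word)
    return word.sub(/y\z/, "ies") if word.match?(/[^aeiou]y\z/)
    return word + "es" if word.match?(/(s|x|ch|sh)\z/)

    word + "s"
  end

  # Convention: plural, underscored class name, unless a subclass overrides it.
  def self.table_name
    @table_name || begin
      *head, last = underscore(name).split("_")
      (head + [pluralize(last)]).join("_")
    end
  end

  def self.primary_key
    @primary_key || "id"
  end

  # Column other tables would use to point at this class's rows.
  def self.foreign_key
    underscore(name) + "_id"
  end

  # Hypothetical configuration hooks for when the conventions don't fit.
  def self.use_table(table)
    @table_name = table
  end

  def self.use_primary_key(key)
    @primary_key = key
  end
end

class EmailAccount < ToyRecord; end
class Person < ToyRecord; end

class LegacyPerson < ToyRecord
  use_table "tbl_person"
  use_primary_key "person_no"
end

puts EmailAccount.table_name   # email_accounts
puts Person.table_name         # people
puts Person.foreign_key        # person_id
puts LegacyPerson.table_name   # tbl_person
```

Only the class that deviates from the conventions carries any configuration. `Person` and `EmailAccount` get everything from their names.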

Metaprogramming is Active Record's other major contribution. Active Record makes heavy use of Ruby's reflection and metaprogramming capabilities. Metaprogramming is simply writing programs that write or change programs. In this case, the Base class adds an attribute to your Person class for every column in the people table. You didn't need to write or generate any code, but you could use person.first_name, person.last_name, and person.email. You'll see more extensive metaprogramming as you read on.
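The following pure-Ruby sketch shows the kind of metaprogramming described above. A base class defines a reader and a writer for every column name at class-definition time, so `Person` never declares its attributes. The hard-coded `COLUMNS` hash stands in for the column list Active Record would read from the database. `TinyBase` and `columns_from` are hypothetical names, not Active Record's API.

```ruby
# Assumed column metadata; a real framework would query the database for this.
COLUMNS = { "people" => %w[id first_name last_name email] }.freeze

class TinyBase
  # Generate one reader and one writer per column on the calling class.
  def self.columns_from(table)
    COLUMNS.fetch(table).each do |column|
      define_method(column) { @attributes[column] }
      define_method("#{column}=") { |value| @attributes[column] = value }
    end
  end

  def initialize(attrs = {})
    @attributes = {}
    attrs.each { |key, value| public_send("#{key}=", value) }
  end
end

class Person < TinyBase
  columns_from "people"
end

person = Person.new(first_name: "Bruce", last_name: "Tate")
person.email = "bruce@example.com"
puts person.first_name                 # Bruce
puts person.respond_to?(:last_name=)   # true
```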

Active Record also includes some features that many Java frameworks don't have, such as model-based validation. Model-based validation lets you make sure the data within your database stays consistent. Change person.rb to look like this:

From the console, load person (because it's changed) and type these Ruby commands:

Ruby returns false. You can see the error message for any Ruby property:

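Here is a hypothetical, stripped-down version of model-based validation in plain Ruby. A class-level `required` macro records which fields must be present. `save` returns false and fills an `errors` hash when one of them is missing. `ValidatedModel` and `required` are illustrative names; in Rails you would use Active Record's own validation macros. The error text is an arbitrary choice.

```ruby
class ValidatedModel
  # Class macro: remember the required fields and give them accessors.
  def self.required(*fields)
    required_fields.concat(fields)
    fields.each { |field| attr_accessor field }
  end

  def self.required_fields
    @required_fields ||= []
  end

  attr_reader :errors

  def initialize
    @errors = {}
  end

  # Check every required field. A real save would write the row only when
  # no errors were recorded; this sketch just reports success or failure.
  def save
    @errors = {}
    self.class.required_fields.each do |field|
      value = public_send(field)
      @errors[field] = "can't be blank" if value.nil? || value.to_s.strip.empty?
    end
    @errors.empty?
  end
end

class Person < ValidatedModel
  required :email, :last_name
end

person = Person.new
person.last_name = "Tate"
puts person.save            # false
puts person.errors[:email]  # can't be blank
```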

So far, you've seen capabilities that you don't find in many Java frameworks, but you might not be convinced yet. After all, the hardest part of database application programming is often managing relationships. I'll show you how Active Record can help. Create another table called addresses:

You've followed the Rails convention for your primary and foreign keys, so you'll get the configuration for free. Now change person.rb to support the address relationship:

And you should create app/models/address.rb:

I should clarify this syntax for readers new to Ruby. belongs_to :person is a method (not a method definition) that takes a symbol as a parameter. (Look at a symbol as an immutable string for now.) The belongs_to method is a metaprogramming method that adds an association, called person, to address. Take a look at how it works. If your console is running, exit it and restart it with ruby script/console. Next, enter the following commands:

Before I talk about the relationship, look again at the find method, find_by_email. Active Record adds a custom finder for each of the attributes. (I've oversimplified things a bit, but this explanation works for now.)
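One common Ruby technique for providing finders like `find_by_email` without writing them by hand is `method_missing`. The class intercepts any call whose name starts with `find_by_`, checks the remainder against the known columns, and searches on that column. The sketch below assumes an in-memory `ROWS` array in place of a real table. It is not Active Record's implementation.

```ruby
class Person
  # Hypothetical in-memory rows standing in for the people table.
  ROWS = [
    { "id" => 1, "first_name" => "Bruce", "email" => "bruce@example.com" },
    { "id" => 2, "first_name" => "Maggie", "email" => "maggie@example.com" }
  ].freeze

  FINDER = /\Afind_by_(\w+)\z/

  # Column named by a find_by_<column> call, or nil if it isn't one.
  def self.finder_column(method_name)
    column = method_name.to_s[FINDER, 1]
    column if column && ROWS.first.key?(column)
  end

  # Answer find_by_<column>(value) calls; anything else raises as usual.
  def self.method_missing(method_name, *args)
    column = finder_column(method_name)
    return super unless column

    ROWS.find { |row| row[column] == args.first }
  end

  def self.respond_to_missing?(method_name, include_private = false)
    !finder_column(method_name).nil? || super
  end
end

puts Person.find_by_email("maggie@example.com")["first_name"]  # Maggie
puts Person.respond_to?(:find_by_first_name)                     # true
```

Defining `respond_to_missing?` alongside `method_missing` keeps `respond_to?` truthful about the generated finders.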

Now, look at the last relationship. has_one :address adds an instance variable of type Address to person. The address is persisted as well; you can verify by entering Address.find_first in the console. So Active Record is actively managing the relationship.

You're not limited to simple one-to-one relationships, either. Change person.rb to look like this:

Make sure you pluralize address to addresses! Now, from the console, type these commands:

The person.addresses << command adds the address to an array of addresses. Active Record added a second address to person, as expected. You verified that there was one more record in the database. So has_many works like has_one, but it adds an array of addresses to each Person. In fact, Active Record lets you have a number of different relationships, including these:

- belongs_to (many-to-one)

- has_one (one-to-one)

- has_many (one-to-many)

- has_and_belongs_to_many (many-to-many)

- inheritance

- acts_as_tree

- acts_as_list

- composition (mapping more than one class to a table)
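Here is a pure-Ruby sketch of how a one-line declaration like has_many can produce an array-like collection. A class macro defines an `addresses` method that returns a proxy. Appending to the proxy fills in the `person_id` foreign key before storing the row. The in-memory `TABLES` hash and the names `ToyModel` and `HasManyCollection` are assumptions for illustration. Real Active Record associations read and write the database instead.

```ruby
# Hypothetical in-memory tables: table name => array of row hashes.
TABLES = Hash.new { |tables, name| tables[name] = [] }

# Array-like proxy over the rows that belong to one owner.
class HasManyCollection
  include Enumerable

  def initialize(owner_id, table, foreign_key)
    @owner_id = owner_id
    @table = table
    @foreign_key = foreign_key
  end

  # Appending sets the foreign key, then stores the row once.
  def <<(row)
    row[@foreign_key] = @owner_id
    TABLES[@table] << row unless TABLES[@table].any? { |r| r.equal?(row) }
    self
  end

  def each(&block)
    TABLES[@table].select { |r| r[@foreign_key] == @owner_id }.each(&block)
  end
end

class ToyModel
  attr_reader :id

  def initialize(id)
    @id = id
  end

  # Class macro: has_many :addresses defines #addresses returning a proxy.
  def self.has_many(table)
    foreign_key = "#{name.downcase}_id"
    define_method(table) { HasManyCollection.new(id, table, foreign_key) }
  end
end

class Person < ToyModel
  has_many :addresses
end

bruce = Person.new(1)
bruce.addresses << { "street" => "1 Main St" }
bruce.addresses << { "street" => "2 Oak Ave" }
puts bruce.addresses.count                  # 2
puts TABLES[:addresses].first["person_id"]  # 1
```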

From the very beginning, Active Record has helped to evolve my understanding of persistence. I learned that wrapping approaches aren't necessarily inferior; they're just different. I'd still lean on mapping frameworks for some problems, like crufty legacy schemas. But I think Active Record has quite a large niche and will improve as the mappings supported by Active Record improve.

I also learned that an effective persistence framework should take on the character of the language. Ruby is highly reflective and uses a form of reflection to query the definition of database system tables.

But, as you'll see now, my Rails persistence experience did not begin and end with Active Record.

Evolving schema independently with migrations

As I learned Active Record, two problems plagued me. Active Record forced me to build create-table SQL scripts, tying me to an individual database implementation. Also, in development, I would often need to delete the database, which forced me to import all of my test data after each major change. I was dreading my first post-production push. Enter migrations, the Ruby on Rails solution for dealing with changes to a production database.

With Rails migrations, I could create a migration for each major change to the database. For this article's application, you could have two migrations. To see the feature in action, create two files. First, create a file called db/migrate/001_initial_schema.rb and make it look like this:

And in db/migrate/002_add_addresses.rb, add this:

You can migrate up by typing:

rake is like Java's Ant. Rails has a target called migrate that runs migrations. This migration adds your entire schema. Go ahead and add a few people, but don't worry about the addresses yet.

Now suppose you've made a terrible mistake and need to migrate down to a previous version. Type:

That command migrated the database back to version 1 (each migration's version is the number at the start of its filename) by applying the AddAddresses.down method. Migrating up calls the up methods on all necessary migrations, in numerical order; migrating down calls the down methods in reverse order. If you look at your database, you'll see only the people table; the addresses table has been removed. So migrate lets you move up or down based on your needs.
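The ordering rule just described fits in a few lines of Ruby: up methods run in ascending version order, down methods in descending order. This sketches the rule only. The migrations are plain lambdas acting on an in-memory `tables` array, and `migrate` is a hypothetical helper, not the Rails rake task.

```ruby
# Each migration has a version (from its filename) plus up and down steps.
Migration = Struct.new(:version, :name, :up, :down)

# Move from version `current` to version `target` and return the new version.
# Going up runs `up` in ascending order; going down runs `down` in descending order.
def migrate(migrations, current, target, log)
  ordered = migrations.sort_by(&:version)
  if target > current
    ordered.select { |m| m.version > current && m.version <= target }
           .each { |m| m.up.call; log << "#{m.name}.up" }
  else
    ordered.reverse
           .select { |m| m.version <= current && m.version > target }
           .each { |m| m.down.call; log << "#{m.name}.down" }
  end
  target
end

tables = []
migrations = [
  Migration.new(1, "InitialSchema", -> { tables << :people }, -> { tables.delete(:people) }),
  Migration.new(2, "AddAddresses", -> { tables << :addresses }, -> { tables.delete(:addresses) })
]

log = []
version = migrate(migrations, 0, 2, log)        # up to the latest version
version = migrate(migrations, version, 1, log)  # back down to version 1
p log     # ["InitialSchema.up", "AddAddresses.up", "AddAddresses.down"]
p tables  # [:people]
```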

Migrations have another feature: dealing with data. You can migrate up again by typing:

This command runs AddAddresses.up and, in the process, initializes each Person object with an Active Record address. You can verify this behavior in the console. If you've added Person objects, you should also have Address objects. Open a new console and count the number of person and address database rows, like this:

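A migration can move data as well as schema. The following sketch works under simple assumptions: the database is an in-memory hash of tables, and the placeholder street value is made up. Its `AddAddresses` module creates an addresses table on the way up and gives every existing person one address row; on the way down it drops the table. It mirrors the idea in the text, not the migration file from this article.

```ruby
# Hypothetical in-memory database: table name => array of row hashes.
db = {
  "people" => [{ "id" => 1, "name" => "Bruce" }, { "id" => 2, "name" => "Maggie" }]
}

module AddAddresses
  # Schema change plus data change: new table, one row per existing person.
  def self.up(db)
    db["addresses"] = []
    db["people"].each_with_index do |person, i|
      db["addresses"] << { "id" => i + 1, "person_id" => person["id"], "street" => "unknown" }
    end
  end

  # Undo both by dropping the table (and the rows in it).
  def self.down(db)
    db.delete("addresses")
  end
end

AddAddresses.up(db)
puts db["people"].size == db["addresses"].size  # true
```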

So migrations deftly handle both schema and data. Now you can look at how these ideas translate to what's happening on the Java side.

Java technology's persistence history is at once fascinating, tragic, and hopeful. Years of bad choices in the Java language's core persistence framework -- Enterprise JavaBeans (EJB) versions 1 and 2 -- led to years of struggling applications and disillusioned users. Hibernate and Java Data Objects (JDO), which both form the foundation of the new EJB persistence and a common persistence standard, led to a rise in object-relational mapping (ORM) within the Java community, and now the overall Java experience is a much more pleasant one.

The Java community has had a seven-year love affair with ORM, a.k.a. mapping frameworks. Fundamentally, a mapping approach lets a user define Java and database objects independently and build maps between them, as in Figure 1:

Figure 1. Mapping frameworks
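In a mapping framework, the object model and the schema are defined independently, and a separate map ties them together. The sketch below makes that map explicit in plain Ruby. The `Customer` class, the legacy-style column names and the `load_mapped`/`dump_mapped` helpers are all hypothetical. Real mapping frameworks such as Hibernate keep this information in XML or annotations and handle much more, including types and relationships.

```ruby
# The object model, designed on its own terms.
class Customer
  attr_accessor :full_name, :contact_email
end

# A hypothetical legacy row whose column names don't match the attributes.
LEGACY_ROW = { "CUST_NM" => "Ada Lovelace", "CUST_EMAIL_ADDR" => "ada@example.com" }.freeze

# The map: the extra artifact a mapping approach asks you to maintain.
CUSTOMER_MAP = { full_name: "CUST_NM", contact_email: "CUST_EMAIL_ADDR" }.freeze

# Build an object from a row by following the map.
def load_mapped(klass, map, row)
  object = klass.new
  map.each { |attribute, column| object.public_send("#{attribute}=", row.fetch(column)) }
  object
end

# Turn an object back into a row, again through the map.
def dump_mapped(object, map)
  map.each_with_object({}) do |(attribute, column), row|
    row[column] = object.public_send(attribute)
  end
end

customer = load_mapped(Customer, CUSTOMER_MAP, LEGACY_ROW)
puts customer.full_name                                # Ada Lovelace
puts dump_mapped(customer, CUSTOMER_MAP) == LEGACY_ROW  # true
```

The map is what lets the two sides differ. That flexibility is the point with a crufty legacy schema, and pure overhead when the names already line up.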

Because the Java language is often an integration language first, mapping plays an important role for integrating crufty legacy systems, even those created long before any object-oriented languages existed. The Java community now embraces mapping to an incredible degree. Today, a typical Java programmer reaches for ORM to solve even basic problems. We like mapping because the Java mapping implementations often beat the Java implementations for alternative approaches: wrapping frameworks.

But you shouldn't disregard the power of wrapping frameworks. A wrapping framework places a thin wrapper around a database table, converting database rows to objects, as in Figure 2:

Figure 2. Wrapping frameworks
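A wrapping framework skips the separate map: the object's shape comes straight from the table. In this plain-Ruby sketch, a `Struct` is generated from a list of column names, and each row becomes an instance of it. The `COLUMNS` and `ROWS` constants are hypothetical stand-ins for a real query result.

```ruby
# Hypothetical result of "SELECT * FROM people": column names, then rows.
COLUMNS = %w[id first_name last_name].freeze
ROWS = [[1, "Bruce", "Tate"], [2, "Maggie", "Tate"]].freeze

# The object type is generated from the table's columns, not hand-written.
PersonRow = Struct.new(*COLUMNS.map(&:to_sym))

# Wrap each raw row in an instance of the generated type.
def wrap(rows)
  rows.map { |values| PersonRow.new(*values) }
end

people = wrap(ROWS)
puts people.first.first_name               # Bruce
puts people.map(&:last_name).uniq.inspect  # ["Tate"]
```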

Your mapping layer carries a good deal of overhead if you don't need a map. And Java wrapping frameworks are seeing something of a resurgence. The Spring framework does JDBC wrapping and integrates features to do all kinds of enterprise integration. iBATIS wraps the result of a SQL statement instead of a table (see Resources). Both frameworks are brilliant pieces of work and underappreciated, in my opinion. But the typical Java mapping framework does things automatically that Java wrapping frameworks force you to do manually.

The Java platform already boasts state-of-the-art mapping frameworks, but I now believe that it needs a groundbreaking wrapping framework. Active Record relies on language capabilities to extend Rails classes on the fly. A Java framework could possibly simulate some of what Active Record offers, but creating something like Active Record would be challenging, possibly breaking three existing Java conventions:

- A persistence solution should work only on a Java POJO (plain old Java object). First and foremost, it would be difficult to create properties based on the contents of a database. A domain object might have a different API. Instead of calling person.get_name to get a property, you might use person.get(name). At the cost of static type checking, you'd get a class built from metadata drawn from the database.

- A persistence solution should express configuration in XML or annotations. Rails bucks this trend by enforcing naming conventions with meaningful defaults, saving the user an incredible amount of repetition. The cost is not great because you can override defaults as needed with additional configuration code. Java frameworks could easily adopt the Rails convention-over-configuration paradigm.

- Schema migrations should be driven from the persistent domain model. Rails bucks this convention with migrations. The core benefit is the migration of both data and schema. Migrations also allow Rails to break the dependence on a relational database vendor. And the Rails strategy decouples the persistence strategy from the issue of schema migrations.
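The first bullet's trade-off can be sketched in Ruby. The record's properties come from table metadata and are read with `get("first_name")` rather than a declared getter. A misspelled property name is then caught only at run time; in Java, static type checking would have caught it at compile time. `MetaRecord` and its `get`/`set` methods are hypothetical.

```ruby
# A record built from column metadata: no per-property getters or setters.
class MetaRecord
  def initialize(columns)
    @values = columns.each_with_object({}) { |column, values| values[column] = nil }
  end

  # Read a property by name; an unknown name fails only when this line runs.
  def get(column)
    @values.fetch(column) { raise ArgumentError, "unknown property: #{column}" }
  end

  def set(column, value)
    raise ArgumentError, "unknown property: #{column}" unless @values.key?(column)

    @values[column] = value
  end
end

# Hypothetical metadata, as if read from the people table's definition.
person = MetaRecord.new(%w[id first_name last_name email])
person.set("first_name", "Bruce")
puts person.get("first_name")  # Bruce

begin
  person.get("frist_name")     # typo: nothing catches this before run time
rescue ArgumentError => e
  puts e.message               # unknown property: frist_name
end
```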

In each of these cases, Rails breaks long-standing conventions that Java framework designers have often held as sacred. Rails starts with a working schema and reflects on the schema to construct a model object. A Java wrapping framework might not take the same approach. Instead, to take advantage of Java's support for static typing (and the advantages of tools that recognize those types and provide features such as code completion), a Java framework would start with a working model and use Java's reflection and the excellent JDBC API to dynamically force that model out to the database.
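The model-first direction can be sketched as generating DDL from a model's declared properties. In Java the property names and types would come from reflection over the class. Here, as a simplifying assumption, `Article` lists them in a `PROPERTIES` hash. The `SQL_TYPES` mapping for a single SQL dialect is also an assumption.

```ruby
# The model declares its properties and their types.
class Article
  PROPERTIES = { id: :integer, title: :string, body: :text }.freeze
  attr_accessor(*PROPERTIES.keys)
end

# Assumed type mapping for one SQL dialect.
SQL_TYPES = { integer: "INTEGER", string: "VARCHAR(255)", text: "TEXT" }.freeze

# Model-first: derive the table definition from the model, by convention
# treating a property named id as the primary key.
def create_table_sql(model, table)
  columns = model::PROPERTIES.map do |property, type|
    column = "#{property} #{SQL_TYPES.fetch(type)}"
    property == :id ? "#{column} PRIMARY KEY" : column
  end
  "CREATE TABLE #{table} (#{columns.join(', ')})"
end

puts create_table_sql(Article, "articles")
# CREATE TABLE articles (id INTEGER PRIMARY KEY, title VARCHAR(255), body TEXT)
```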

One new Java framework that's moving toward sophistication as a wrapping framework is RIFE (and its subproject RIFE/Crud), created by Geert Bevin (see Resources). At the core, RIFE's persistence has three major layers, shown in Figure 3:

- A simple JDBC wrapper, which provides a callback-style implementation of JDBC through templates

- A set of database-independent SQL builders offering an object-oriented approach to query building

- A type-mapping layer that can convert most SQL types to most related Java types

Figure 3. Architecture of persistence for the RIFE framework
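To give a feel for the second layer, here is a toy, immutable SELECT builder in Ruby. Each call returns a new builder, and only `to_sql` renders a string for a chosen dialect: row limits use `LIMIT` for MySQL and `TOP` for SQL Server. This is not RIFE's API. It also splices conditions in as raw strings with no parameter binding, so it should never see untrusted input.

```ruby
class SelectBuilder
  def initialize(table, fields: ["*"], conditions: [], order: nil, limit: nil)
    @table = table
    @fields = fields
    @conditions = conditions
    @order = order
    @limit = limit
  end

  # Each builder method returns a modified copy; the receiver is unchanged.
  def fields(*names)
    copy(fields: names)
  end

  def where(condition)
    copy(conditions: @conditions + [condition])
  end

  def order_by(column)
    copy(order: column)
  end

  def limit(count)
    copy(limit: count)
  end

  # Render for a dialect: only the row-limit syntax differs in this sketch.
  def to_sql(dialect = :mysql)
    top = (dialect == :mssql && @limit) ? "TOP #{@limit} " : ""
    sql = "SELECT #{top}#{@fields.join(', ')} FROM #{@table}"
    sql += " WHERE #{@conditions.join(' AND ')}" unless @conditions.empty?
    sql += " ORDER BY #{@order}" if @order
    sql += " LIMIT #{@limit}" if @limit && dialect != :mssql
    sql
  end

  private

  def copy(**changes)
    state = { fields: @fields, conditions: @conditions, order: @order, limit: @limit }
    SelectBuilder.new(@table, **state.merge(changes))
  end
end

query = SelectBuilder.new("articles").fields("id", "title")
                                     .where("author_id = 1").order_by("id").limit(10)
puts query.to_sql(:mysql)
# SELECT id, title FROM articles WHERE author_id = 1 ORDER BY id LIMIT 10
puts query.to_sql(:mssql)
# SELECT TOP 10 id, title FROM articles WHERE author_id = 1 ORDER BY id
```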

These layers are used by simplified APIs, called query-managers, which provide intuitive access to persistence-related tasks such as save and update. One is specific to JDBC, and the other provides an analogous API that detects metadata related to RIFE's content-management framework.

RIFE/Crud sits on top of all of these frameworks, providing a very small layer that groups all of them together. RIFE/Crud uses constraints and bean properties to build an application's user interface, the site structure, the persistence logic, and the business logic automatically. RIFE/Crud relies heavily on RIFE's metadata capabilities to generate the interface and the relevant APIs, but it still functions with POJOs. RIFE/Crud is completely extensible through RIFE's clearly defined integration points in its API, templates, and component architecture.

The query-managers' API is strikingly simple. Here's an example of RIFE's persistence model in action. Suppose you have an Article class and you want to build a table structure around it and persist two articles to the database. You use this code to get a query manager, given a data source:


Next, create the table structure in the database by installing the query manager:


Now you can use the query manager to access the database:


So, like Active Record, the RIFE framework uses convention over configuration. Article must support an ID property called id, or the user must specify the ID property using RIFE's API. And like Active Record, RIFE uses the capabilities of the native language. In this case, you still have a wrapping framework, but the model drives the schema instead of the other way around. And you still have a much simpler API than most object-relational mappers for many of the problems RIFE needs to solve. Better wrapping frameworks would serve Java well.
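The convention-over-configuration rule for identifiers can be sketched in a few lines of plain Java reflection. This is a hypothetical illustration that assumes JavaBean-style getters. `IdConvention`, `identifierProperty`, and both model classes are invented names and do not reflect how RIFE itself locates the ID property. The sketch uses the conventional `id` unless the caller supplies an explicit override, and a missing property fails loudly rather than silently.

```java
// Hypothetical sketch of "use id by convention, unless told otherwise".
public class IdConvention {

    // Follows the convention: has an id property.
    public static class Article {
        private int id;
        private String title;
        public int getId() { return id; }
        public void setId(int id) { this.id = id; }
        public String getTitle() { return title; }
        public void setTitle(String title) { this.title = title; }
    }

    // Breaks the convention: its identifier is named articleNumber.
    public static class LegacyArticle {
        private int articleNumber;
        public int getArticleNumber() { return articleNumber; }
        public void setArticleNumber(int articleNumber) { this.articleNumber = articleNumber; }
    }

    // Returns the identifier property name: the explicit override if given,
    // otherwise the conventional "id", provided a matching public getter exists.
    public static String identifierProperty(Class<?> beanClass, String override) {
        String name = (override != null) ? override : "id";
        String getter = "get" + Character.toUpperCase(name.charAt(0)) + name.substring(1);
        try {
            beanClass.getMethod(getter); // throws if the bean has no such getter
            return name;
        } catch (NoSuchMethodException e) {
            throw new IllegalArgumentException(beanClass.getSimpleName()
                + " has no '" + name + "' property; specify the ID property explicitly", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(identifierProperty(Article.class, null));
        System.out.println(identifierProperty(LegacyArticle.class, "articleNumber"));
    }
}
```

`identifierProperty(Article.class, null)` returns `"id"` by convention. `LegacyArticle` has no `id` property, so it works only with the explicit override `"articleNumber"` and otherwise throws `IllegalArgumentException`.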


Active Record is a persistence model written in a non-Java language that takes good advantage of that language's capabilities. If you've not seen it before, I hope this discussion has opened your eyes to what's possible in a wrapping framework. You also saw migrations. In theory, Java frameworks could adopt this concept. Though mapping frameworks have their place, I hope you'll be able to use this knowledge to look beyond the conventional mapping solutions in the Java language and catch the wrapping wave. Next time, you'll look at continuation-based approaches to Web development.


Learn

- Beyond Java (Bruce Tate, O'Reilly, 2005): The author's book about the Java language's rise and plateau and the technologies that could challenge the Java platform in some niches.

- "Rolling with Ruby on Rails" and "Learn all about Ruby on Rails": Learn more about Ruby and Rails, including installation procedures.

- "Ruby on Rails and J2EE: Is there room for both?" (Aaron Rustad, developerWorks, July 2005): This article compares and contrasts some of the key architectural features of Rails and traditional J2EE frameworks.

- "Ruby off the Rails" (Andrew Glover, developerWorks, December 2005): Andrew Glover digs beneath the hype for a look at what Java developers can do with Ruby, all by itself.

- Active Record: Active Record is the persistence framework for the Ruby on Rails framework.

- "Improve persistence with Apache iBATIS and Derby" (Daniel Wintschel, developerWorks, January 2006): Learn all about iBATIS, one of Java's best wrapping frameworks, in this three-part tutorial series.

- "The Spring series, Part 2: When Hibernate meets Spring" (Naveen Balani, developerWorks, August 2005): This article teaches what it presents as the best way to use Hibernate: in combination with Spring.

- The Java technology zone: Hundreds of articles about every aspect of Java programming.

Get products and technologies

- The RIFE framework: RIFE uses some of the more radical techniques from non-Java languages.

- Ruby on Rails: Download the open source Ruby on Rails Web framework.

- Ruby: Get Ruby from the project Web site.

Discuss

- developerWorks blogs: Get involved in the developerWorks community.


Bruce Tate is a father, mountain biker, and kayaker in Austin, Texas. He's the author of three best-selling Java books, including the Jolt winner Better, Faster, Lighter Java. He recently released Spring: A Developer's Notebook. He spent 13 years at IBM and is now the founder of the J2Life, LLC, consultancy, where he specializes in lightweight development strategies and architectures based on Java technology and Ruby.

source:http://www-128.ibm.com/developerworks/java/library/j-cb03076/?ca=dgr-lnxw01ActiveRecord

'No quick fix' from nuclear power

The UK's ageing nuclear plants are being phased out

The Sustainable Development Commission (SDC) report says doubling nuclear capacity would have only a small impact on reducing carbon emissions by 2035.

The body, which advises the government on the environment, says this must be set against the potential risks.

The government is currently undertaking a review of Britain's energy needs.

It regards building nuclear capacity as an alternative to reliance on fossil fuels such as coal, oil and gas.

As North Sea supplies dwindle, nuclear is seen by some as a more secure source of energy than hydrocarbon supplies from unstable regimes. Proponents say it could generate large quantities of electricity while helping to stabilise carbon dioxide (CO2) emissions.

But the SDC report, compiled in response to the energy review, concluded that the risks of nuclear energy outweighed its advantages.

Pushing ahead

Jonathon Porritt, chairman of the SDC, commented: "There's little point in denying that nuclear power has benefits, but in our view, these are outweighed by serious disadvantages.

"The government is going to have to stop looking for an easy fix to our climate change and energy crises - there simply isn't one."

He said that the SDC had concluded that the long-term target of reducing carbon dioxide emissions could be met without nuclear power.

Energy minister Malcolm Wicks, who is leading the government's review, said the SDC's findings made an "important and thorough contribution" to the debate.

"Securing clean, affordable energy supplies for the long term will not be easy. No one has ever suggested that nuclear power - or any other individual energy source - could meet all of those challenges," Mr Wicks said.

"As the commission itself finds, this is not a black and white issue. It does, however, agree that it is right that we are assessing the potential contribution of new nuclear [plants]."

24-hour power

The Nuclear Industry Association (NIA), the representative body for the UK's nuclear sector, gave the report a more cautious welcome.

Philip Dewhurst, chairman of the NIA, said the SDC report was not as negative as it had feared.

"What the report is basically saying is that the government has got to make a choice between renewables and nuclear.

"The SDC is saying you cannot have both, but of course you can. We support having both renewables and nuclear," he told the BBC News website.

"The key factor about nuclear is its base load which means it keeps working 24 hours a day, seven days a week. Everyone would agree that some renewable technologies are intermittent at best."

Research by the SDC suggests that even if the UK's existing nuclear capacity were doubled, it would provide only an 8% cut in CO2 emissions by 2035 (and nothing before 2010).

While the SDC recognises that nuclear is a low-carbon technology with an impressive safety record in the UK, it identifies five major disadvantages:

- No long-term solutions for the storage of nuclear waste are yet available, says the SDC, and storage presents clear safety issues

- The economics of nuclear new-build are highly uncertain, according to the report

- Nuclear would lock the UK into a centralised energy distribution system for the next 50 years when more flexible distribution options are becoming available

- The report claims that nuclear would undermine the drive for greater energy efficiency

- If the UK brings forward a new nuclear programme, it becomes more difficult to deny other countries the same technology, the SDC claims

Future development

The panel does not rule out further research into new nuclear technologies and pursuing answers to the waste problem, as future technological developments may justify a re-examination of the issue.

But the report concludes that Britain can meet its energy needs without nuclear power.

"With a combination of a low carbon innovation strategy and an aggressive expansion of energy efficiency and renewables, the UK would become a leader in low carbon technologies," the SDC claims.

Critics of the government's energy review say it is a way to get nuclear power, touted as a possible solution by Tony Blair, back on the agenda.

Conservative energy spokesman Alan Duncan said ministers should pay attention to the commission's conclusions.

"This report puts a spanner in the works for the government, who everybody believes has already made up its mind in favour of nuclear."

The Tories are currently reviewing their energy policy. Zac Goldsmith, deputy chair of the party's environment policy review, which is due to report in 18 months' time, is strongly opposed to nuclear power.

The Liberal Democrats have also attacked the economic uncertainties of nuclear power.

The Green Party says the government is determined to push ahead with nuclear power despite evidence that it is uneconomic.

The government is set to publish the findings from the energy review later this year.

source:http://news.bbc.co.uk/1/hi/sci/tech/4778344.stm

Finding hidden treasures in OpenOffice 2.0's Charting Wizard

I loved Nancy Drew mysteries. Somehow, Nancy always had a hunch about where to look, in the old clock or the mysterious ballet dancer's closet, to find the jewels, the glowing eye or the kidnapped heiress.

Nancy would have been great at doing charts in OpenOffice 2.0.

The chart features in OpenOffice are like a mystery-lover's dream vacation: a huge, mysterious old house with lots of long halls, secret bookcases, dark closets and creaky doors that, when you peer behind them, reveal wonderful secrets.

Now, I realize that all this mystery can be annoying if you don't have three months of summer to explore the charting features and you just want your darn scatterplot out in a few minutes. Fortunately, even though much of the power of OpenOffice charts is hidden, once you know it's available and where to find it, you can get to it much more easily.

So, here's your tour of the powerful, hidden charting jewels in OpenOffice 2.0.

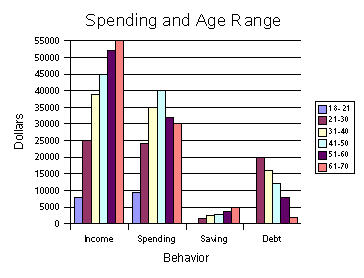

Setting up the Chart

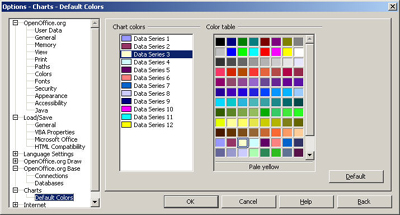

The chart generates colored lines, bars, or other shapes to show the data. Some of these colors are, well, yucky. Specify the colors you want to use ahead of time -- you can do it later, but this is more efficient.

Choose Tools > Options > Chart > Colors. For any colors you don't like, such as the pale yellow, just select something different. Click OK when you're done.

If you want to go the extra mile, you can even choose Tools > Options > OpenOffice.org > Color and make your own colors.

When you modify the chart later, you can change the colors too, and you can also fill bars, lines, and other shapes with gradients or even graphics.

Making the Chart

Making a chart is fairly simple. There are a few checkboxes you definitely want to mark, however, and a few lists that you should scroll through to see all the options.

The basics:

- Make a table in Writer, or just type up your data in Calc.

- Select it all, including the titles.

- Choose Insert > Object > Chart (Writer) or Insert > Chart (Calc).

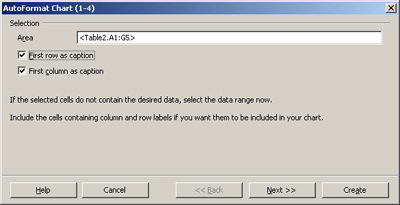

The first window appears, showing the range of data.

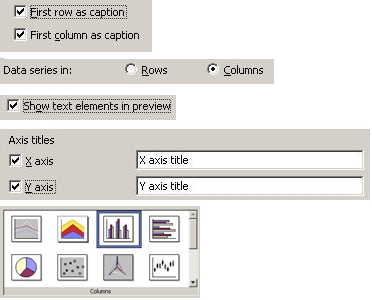

Click Next to go from one window to the next, and specify how you want the chart. At the end, click Create, and the chart appears.

Finding the important selections along the way

It's tempting to just whiz through and accept the defaults, but you miss stuff that way. When you create the chart, always mark everything you can. If you have the option, as you do here, for labels on the X and Y axes, do it. When you have the option to switch data from rows to columns, try it and see the effect. Scroll through all the options in any window of chart types and variations. Whenever you see any of these items in the wizard, mark them and/or fiddle with them to see how it affects the results.

Modifying the Chart: The Tools

You've got your chart and it looks great.

But you just need to change a few things. What do you do to change it? There aren't any attached "change this" or "update this" buttons. It just sits there and stares at you.

What it lacks in obviousness, OpenOffice makes up for in the many ways to find the tools. They're in four places. You can see these options once you've double-clicked in the chart and at any point thereafter.

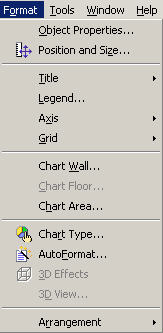

- Format menu

- Formatting toolbar

- Right-click and view the context menu. (One of my favorite things to say in training classes is, "when in doubt, right-click." It's good in any situation, not just charts.)

- Just double-click on what you want.

Note: Especially when double-clicking and right-clicking, do so on exactly the item you want to modify.

Of the options listed, Object Properties is especially useful. Chart Wall is the data portion of the chart and Chart Area is the whole chart.

Modifying the Chart: Selecting it the Right Way

Here's a summary of how you need to select a chart in order to use those modification tools. Think of it as an overall guide to the OpenOffice.org equivalent of "push the spine of War and Peace, twist the third candle from the left and the hidden bookcase will twirl around to show you the hidden passageway down to the mad scientist's laboratory."

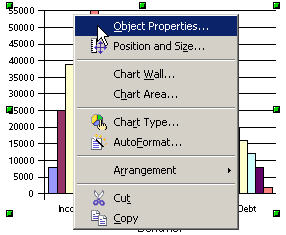

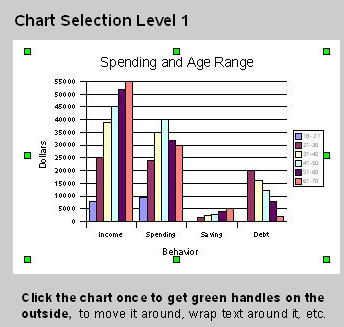

- Select once for green handles to move the chart.

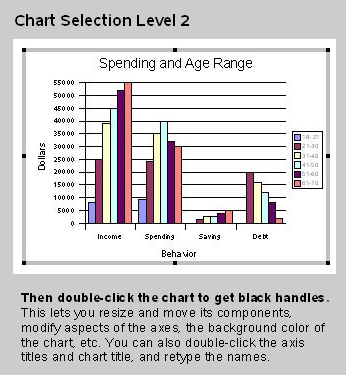

- Then double-click, for black handles that let you resize and modify things like the background color of the chart.

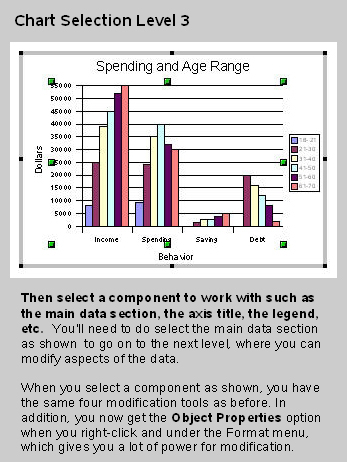

- Then, click again on a particular component to modify it, like the legend, a chart title, the main data section, etc.

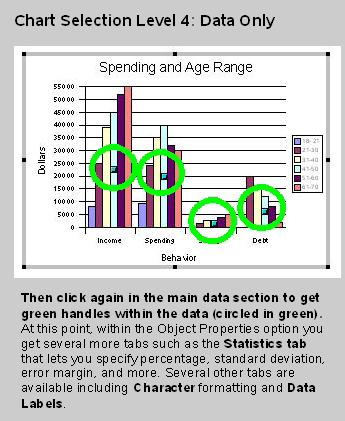

- (Main data section only) Click in the data area to see green handles and modify additional aspects of the data display like statistics.

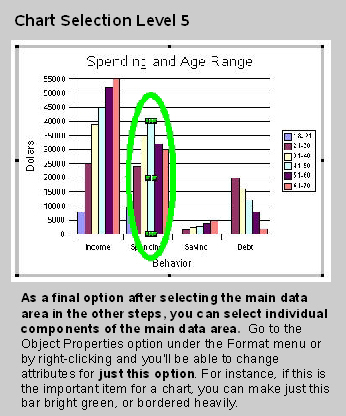

- (Main data section only) Click on a particular component like one bar in the graph to modify aspects of just that component.

The descriptions of the handles aren't as good as seeing them. Here's what each level looks like, with a summary of what you can do at each level.

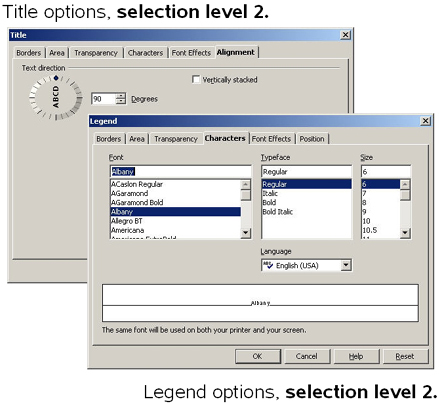

Here are some of the options you can get from right-clicking and choosing to modify the title, the axes and the legend.

If you're not seeing what you need at this point, go on to level 4.

Here's the difference in Object Properties between levels 3 and 4 for the main data area.

The options for level 5 look pretty much the same as before. The only difference is that you apply them specifically to the individual selected component.

I would not go out of my way to nominate the OpenOffice.org chart interface for the "Easy-Breezy Intuitive Powerful Yet Simple" award. It is a good tool, once you know where the secret passageways are and how to twist the third candle from the left to get at all the cool tools. A warning: if you get up in the middle of the night to do some charting and you hear violins playing behind the Object Properties window -- don't even think about following it.

Solveig Haugland has worked as a technical writer, course developer, instructor and author in the high-tech industry for twelve years, including six years at Microsoft Great Plains, three years at Sun, and a year at BEA. Currently, Solveig is a StarOffice and OpenOffice.org instructor, author, and freelance technical writer. She is also co-author, with Floyd Jones, of three how-to books: StarOffice 5.2 Companion, StarOffice 6.0 Office Suite Companion, and OpenOffice.org 1.0 Resource Kit, published by Prentice Hall PTR. For more tips on working in OpenOffice, visit Solveig's OpenOffice blog.

source:http://searchopensource.techtarget.com/tip/1,289483,sid39_gci1171394,00.html

Tougher hacking laws get support

The hacking measures enjoy some cross-party support

Anyone hacking a computer could be punished with 10 years' imprisonment under new laws.

The move follows campaigning from Labour MP Tom Harris, whose ideas are now being adopted in the Police and Justice Bill.

Offences such as denial-of-service attacks, in which systems are debilitated, will be outlawed more clearly.

Typically, this is done by massively overloading the system and thereby exhausting its computing power.

'Perfectly sensible'

The bill - which was being debated for the first time in the House of Commons on Monday - would also boost the penalty for using hacking tools.

Home Secretary Charles Clarke said: "We need to recognise that in our increasingly interdependent world, work with international partners to tackle terrorism and serious organised crime will be increasingly important.

"One of the growing new threats that can only be tackled through extensive international cooperation is the continued threat posed by computer hacking and denial-of-service attacks."

During the first debate on the legislation, Nick Herbert, for the Tories, criticised much of the rest of the bill, but said measures to tackle hacking were "perfectly sensible" and would enjoy support from across the Commons.

For the Liberal Democrats, Lynne Featherstone also said there was support for measures on computer hacking, while dismissing the bill as a whole as pernicious.

The bill passed on to its second reading without a vote.

source:http://news.bbc.co.uk/1/hi/technology/4781608.stm

Wal-Mart Enlists Bloggers in P.R. Campaign

It was the kind of pro-Wal-Mart comment the giant retailer might write itself. And, in fact, it did.

Several sentences in Mr. Pickrell's Jan. 20 posting — and others from different days — are identical to those written by an employee at one of Wal-Mart's public relations firms and distributed by e-mail to bloggers.

Under assault as never before, Wal-Mart is increasingly looking beyond the mainstream media and working directly with bloggers, feeding them exclusive nuggets of news, suggesting topics for postings and even inviting them to visit its corporate headquarters.

But the strategy raises questions about what bloggers, who pride themselves on independence, should disclose to readers. Wal-Mart, the nation's largest private employer, has been forthright with bloggers about the origins of its communications, and the company and its public relations firm, Edelman, say they do not compensate the bloggers.

But some bloggers have posted information from Wal-Mart, at times word for word, without revealing where it came from.

Glenn Reynolds, the founder of Instapundit.com, one of the oldest blogs on the Web, said that even in the blogosphere, which is renowned for its lack of rules, a basic tenet applies: "If I reprint something, I say where it came from. A blog is about your voice, it seems to me, not somebody else's."

Companies of all stripes are using blogs to help shape public opinion.

Before General Electric announced a major investment in energy-efficient technology last year, company executives first met with major environmental bloggers to build support. Others have reached out to bloggers to promote a product or service, as Microsoft did with its Xbox game system and Cingular Wireless has done in the introduction of a new phone.

What is different about Wal-Mart's approach to blogging is that rather than promoting a product — something it does quite well, given its $300 billion in annual sales — it is trying to improve its battered image.

Wal-Mart, long criticized for low wages and its health benefits, began working with bloggers in late 2005 "as part of our overall effort to tell our story," said Mona Williams, a company spokeswoman.

"As more and more Americans go to the Internet to get information from varied, credible, trusted sources, Wal-Mart is committed to participating in that online conversation," she said.

Copies of e-mail messages that a Wal-Mart representative sent to bloggers were made available to The New York Times by Bob Beller, who runs a blog called Crazy Politico's Rantings. Mr. Beller, a regular Wal-Mart shopper who frequently defends the retailer on his blog, said the company never asked that the messages be kept private.

In the messages, Wal-Mart promotes positive news about itself, like the high number of job applications it received at a new store in Illinois, and criticizes opponents, noting for example that a rival, Target, raised "zero" money for the Salvation Army in 2005, because it banned red-kettle collectors from stores.

The author of the e-mail messages is a blogger named Marshall Manson, a senior account supervisor at Edelman who writes for conservative Web sites like Human Events Online, which advocates limited government, and Confirm Them, which has pushed for the confirmation of President Bush's judicial nominees.

In interviews, bloggers said Mr. Manson contacted them after they wrote postings that either endorsed the retailer or challenged its critics.

Mr. Beller, who runs Crazy Politico's Rantings, for example, said he received an e-mail message from Mr. Manson soon after criticizing the passage of a law in Maryland that requires Wal-Mart to spend 8 percent of its payroll on health care.

Mr. Manson, identifying himself as a "blogger myself" who does "online public affairs for Wal-Mart," began with a bit of flattery: "Just wanted you to know that your post criticizing Maryland's Wal-Mart health care bill was noticed here and at the corporate headquarters in Bentonville," he wrote, referring to the city in Arkansas.

"If you're interested," he continued, "I'd like to drop you the occasional update with some newsworthy info about the company and an occasional nugget that you won't hear about in the M.S.M." — or mainstream media.

Bloggers who agreed to receive the e-mail messages said they were eager to hear Wal-Mart's side of the story, which they said they felt had been drowned out by critics, and were tantalized by the promise of exclusive news that might attract more visitors to their Web sites.

"I am always interested in tips to stories," said one recipient of Mr. Manson's e-mail messages, Bill Nienhuis, who operates a Web site called PunditGuy.com.

But some bloggers are also defensive about their contacts with Wal-Mart. When they learned that The New York Times was looking at how they were using information from the retailer, several bloggers posted items challenging The Times's article before it had appeared. One blog, Iowa Voice, run by Mr. Pickrell, pleads for advertisers to buy space on the blog in anticipation of more traffic because of the article.

The e-mail messages Mr. Manson has sent to bloggers are structured like typical blog postings, with a pungent sentence or two introducing a link to a news article or release.

John McAdams, a political science professor at Marquette University who runs the Marquette Warrior blog, recently posted three links about union activity in the same order as he received them from Mr. Manson. Mr. McAdams acknowledged that he worked from Wal-Mart's links and that he did not disclose his contact with Mr. Manson.

"I usually do not reveal where I get a tip or a lead on a story," he said, adding that journalists often do not disclose where they get ideas for stories either.

Wal-Mart has warned bloggers against lifting text from the e-mail it sends them. After apparently noticing the practice, Mr. Manson asked them to "resist the urge," because "I'd be sick if someone ripped you because they noticed a couple of bloggers with nearly identical posts."

But Mr. Manson has not encouraged bloggers to reveal that they communicate with Wal-Mart or to attribute information to either the retailer or Edelman, Ms. Williams of Wal-Mart said.

To be sure, some bloggers who post material from Mr. Manson's e-mail do disclose its origins, mentioning Mr. Manson and Wal-Mart by name. But others refer to Mr. Manson as "one reader," say they received a "heads up" about news from Wal-Mart or disclose nothing at all.

Mr. Pickrell, the 37-year-old who runs the Iowa Voice blog, said he began receiving updates from Wal-Mart in January. Like Mr. Beller, of Crazy Politico, Mr. Pickrell had criticized the Maryland legislature over its health care law before Wal-Mart contacted him.

Since then, he has written at least three postings that contain language identical to sentences in e-mail from Mr. Manson. In one, which Mr. Pickrell attributed to a "reader," he reported that Wal-Mart was about to announce that a store in Illinois received 25,000 applications for 325 jobs. "That's a 1.3 percent acceptance rate," the message read. "Consider this: Harvard University (undergraduate) accepts 11 percent of applicants. The Navy Seals accept 5 percent of applicants."

Asked in a telephone interview about the resemblance of his postings to Mr. Manson's, Mr. Pickrell said: "I probably cut and paste a little bit and I should not have," adding that "I try to write my posting in my own words."

In an e-mail message sent after the interview, Mr. Pickrell said he received e-mail from many groups, including those opposed to Wal-Mart, which he uses as a starting point to "do my own research on a topic."

"I draw my own conclusions when I form my opinions," he said.

Mr. Pickrell, explaining his support for Wal-Mart, said he shops there regularly and is impressed with how his mother-in-law, a Wal-Mart employee, is treated. "They go real out of their way for their people," he said.

Wal-Mart's blogging initiative is part of a ballooning public relations campaign developed in consultation with Edelman to help Wal-Mart as two groups, Wal-Mart Watch and Wake Up Wal-Mart, aggressively prod it to change. The groups operate blogs that receive posts from current and former Wal-Mart employees, elected leaders and consumers.

Edelman also helped Wal-Mart develop a political-style war room, staffed by former political operatives, which monitors and responds to the retailer's critics, and helped create Working Families for Wal-Mart, a new group that is trying to build support for the company in cities across the country.

At Edelman, Mr. Manson, who sends many of the e-mail messages to bloggers, works closely on the Wal-Mart account with Mike Krempasky, a co-founder of RedState.org, a conservative blog. Both are regular bloggers, which in Mr. Manson's case means he has written critically of individuals and groups Wal-Mart may eventually call on for support.

Before he was hired by Edelman in November, Mr. Manson wrote on the Human Events Online blog that members of the San Francisco city council were "dolts" and "twits" for rejecting a proposed World War II memorial and that every day "the United Nations slides further and further into irrelevance." After he was hired, Mr. Manson wrote that the career of Senator Lincoln Chafee of Rhode Island was marked by "pointless indecision."

Wal-Mart declined to make Mr. Manson available for comment. Ms. Williams said, "It is not Wal-Mart's role to monitor the opinions of our consultants or how they express them on their own time."

In a sign of how eager Wal-Mart is to develop ties to bloggers, the company has invited them to a media conference to be held at its headquarters in April. In e-mail messages, Wal-Mart has polled several bloggers about whether they would make the trip, which the bloggers would have to pay for themselves.

Mr. Reynolds of Instapundit.com said he recently was invited to Wal-Mart's offices but declined. "Bentonville, Arkansas," he said, "is not my idea of a fun destination."

source:http://www.nytimes.com/2006/03/07/technology/07blog.html?ex=1299387600&en=ae7585374bf280b9&ei=5088&partner=rssnyt&emc=rss